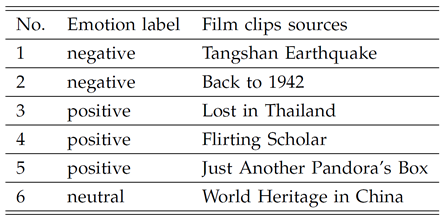

Stimuli and Experiment

Fifteen Chinese film clips (eliciting positive, neutral and negative emotions) were chosen from a pool of materials as stimuli for the experiments.

The selection criteria for the film clips are as follows:

(a) the whole experiment should not be so long that it causes fatigue in the subjects;

(b) the videos should be understood without explanation;

(c) the videos should elicit a single desired target emotion.

The duration of each film clip is approximately 4 minutes.

Each film clip was edited to elicit a coherent emotion and to maximize its emotional meaning.

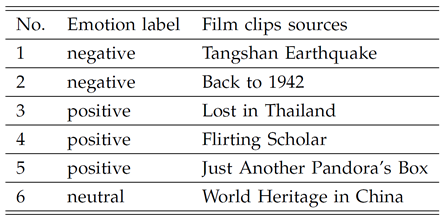

The details of the film clips used in the experiments are listed below:


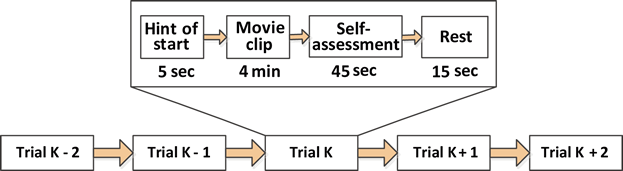

There is a total of 15 trials for each experiment.

In each session, there was a 5 s hint before each clip, followed by 45 s of self-assessment and 15 s of rest after each clip. The clips were presented in an order such that no two clips targeting the same emotion were shown consecutively. For feedback, participants were asked to report their emotional reactions to each film clip by completing a questionnaire immediately after watching it. The detailed protocol is shown below:
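As a rough illustration of the protocol, the sketch below estimates the length of one session from the stated timings and checks the ordering constraint. It assumes every clip lasts exactly 4 minutes (the text only says "approximately"), and the functions and the example order are hypothetical, not the order actually used in SEED.

```python
from typing import Sequence

# Timing constants from the protocol description (seconds).
HINT_S = 5
SELF_ASSESSMENT_S = 45
REST_S = 15
# Assumption: every clip lasts about 4 minutes; real clip lengths vary.
APPROX_CLIP_S = 4 * 60


def session_duration_s(n_clips: int, clip_s: float = APPROX_CLIP_S) -> float:
    """Approximate session length: hint + clip + self-assessment + rest per trial."""
    return n_clips * (HINT_S + clip_s + SELF_ASSESSMENT_S + REST_S)


def is_valid_order(targets: Sequence[int]) -> bool:
    """True if no two consecutive clips target the same emotion (-1, 0 or +1)."""
    return all(a != b for a, b in zip(targets, targets[1:]))


if __name__ == "__main__":
    order = [1, 0, -1] * 5  # hypothetical order, not the one used in SEED
    print(is_valid_order(order))
    print(session_duration_s(15) / 60)  # minutes
```

Under the 4-minute assumption, 15 trials take 15 × (5 + 240 + 45 + 15) s = 4575 s, or about 76 minutes per session.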

EEG signals and eye movements were collected with the 62-channel ESI NeuroScan System and SMI eye-tracking glasses.

The experimental scene and the corresponding EEG electrode placement are shown in the following figures.

Subjects

Fifteen Chinese subjects (7 males and 8 females; mean age: 23.27, SD: 2.37) participated in the experiments.

To protect their privacy, we do not disclose the subjects' names and instead identify each subject by a number from 1 to 15.

Subjects 1-5 and 8-14 (12 subjects) have both EEG and eye movement data, while subjects 6, 7 and 15 have only EEG data.

Dataset Summary

The SEED consists of two parts: SEED_EEG and SEED_Multimodal. SEED_EEG contains EEG data of 15 subjects. SEED_Multimodal contains EEG and eye movement data of 12 subjects.

Subject indices are the same in both parts. For example, subject 1 in SEED_EEG is also subject 1 in SEED_Multimodal.
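Because the indices are shared, the available modalities can be derived from the subject number alone. This is a minimal sketch using only the subject lists given above; the function names are hypothetical.

```python
# Subject indices run from 1 to 15 and are shared by SEED_EEG and SEED_Multimodal.
ALL_SUBJECTS = range(1, 16)
# Per the subject description, subjects 6, 7 and 15 have EEG data only.
EEG_ONLY = {6, 7, 15}


def modalities(subject: int) -> set:
    """Return the modalities recorded for one subject index."""
    if subject not in ALL_SUBJECTS:
        raise ValueError(f"subject index must be in 1-15, got {subject}")
    return {"eeg"} if subject in EEG_ONLY else {"eeg", "eye"}


def multimodal_subjects() -> list:
    """Subjects expected in SEED_Multimodal (EEG plus eye movements)."""
    return [s for s in ALL_SUBJECTS if s not in EEG_ONLY]
```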

- SEED_EEG

- In the "Preprocessed_EEG" folder, there are MATLAB (.mat) files that contain downsampled, preprocessed and segmented versions of the EEG data.

The data were downsampled to 200 Hz.

A bandpass filter from 0 to 75 Hz was applied.

We extracted the EEG segments that correspond to the duration of each movie clip.

There are 45 .mat (MATLAB) files in total, one per experiment: each of the 15 subjects performed the experiment three times, with an interval of approximately one week between sessions (15 × 3 = 45).

Each file contains 16 arrays.

Fifteen arrays (eeg_1 to eeg_15) contain the segmented, preprocessed EEG data of the 15 trials in one experiment, each stored as channels × samples.

The array named labels contains the emotion label of each trial (-1 for negative, 0 for neutral and +1 for positive).
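To make the file layout concrete, the sketch below turns the dictionary returned by `scipy.io.loadmat(path)` into an ordered list of trials and a flat list of labels. It assumes only the array names described above (eeg_1 to eeg_15 and labels); the helper `split_trials`, its parameters and the 62-channel check are illustrative, and the key pattern also tolerates a prefix in case a file's array names differ slightly from the ones listed. It uses only the standard library, so it also accepts plain nested lists.

```python
import re
from typing import Mapping

# Matches keys such as "eeg_1" ... "eeg_15"; also accepts a prefix before "eeg".
TRIAL_KEY = re.compile(r"eeg_?(\d+)$")


def _flatten(x):
    """Yield scalars from numpy arrays (via .tolist()) or nested lists."""
    if hasattr(x, "tolist"):
        x = x.tolist()
    if isinstance(x, (list, tuple)):
        for item in x:
            yield from _flatten(item)
    else:
        yield x


def split_trials(mat: Mapping[str, object], n_channels: int = 62,
                 labels_key: str = "labels"):
    """Hypothetical helper: return (trials ordered by index, labels as ints)."""
    trials = {}
    for key, value in mat.items():
        match = TRIAL_KEY.search(key)
        if match:  # skips loadmat metadata such as "__header__"
            trials[int(match.group(1))] = value
    ordered = []
    for idx in sorted(trials):  # numeric sort: eeg_2 comes before eeg_10
        trial = trials[idx]
        if len(trial) != n_channels:  # first dimension is the channel axis
            raise ValueError(f"eeg_{idx}: expected {n_channels} channels, got {len(trial)}")
        ordered.append(trial)
    labels = [int(v) for v in _flatten(mat[labels_key])]
    if len(labels) != len(ordered):
        raise ValueError(f"{len(labels)} labels for {len(ordered)} trials")
    return ordered, labels
```

In practice the input would come from something like `split_trials(scipy.io.loadmat("<experiment file>.mat"))`, where the file name is a placeholder.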

The detailed order of the channels is included in the dataset.

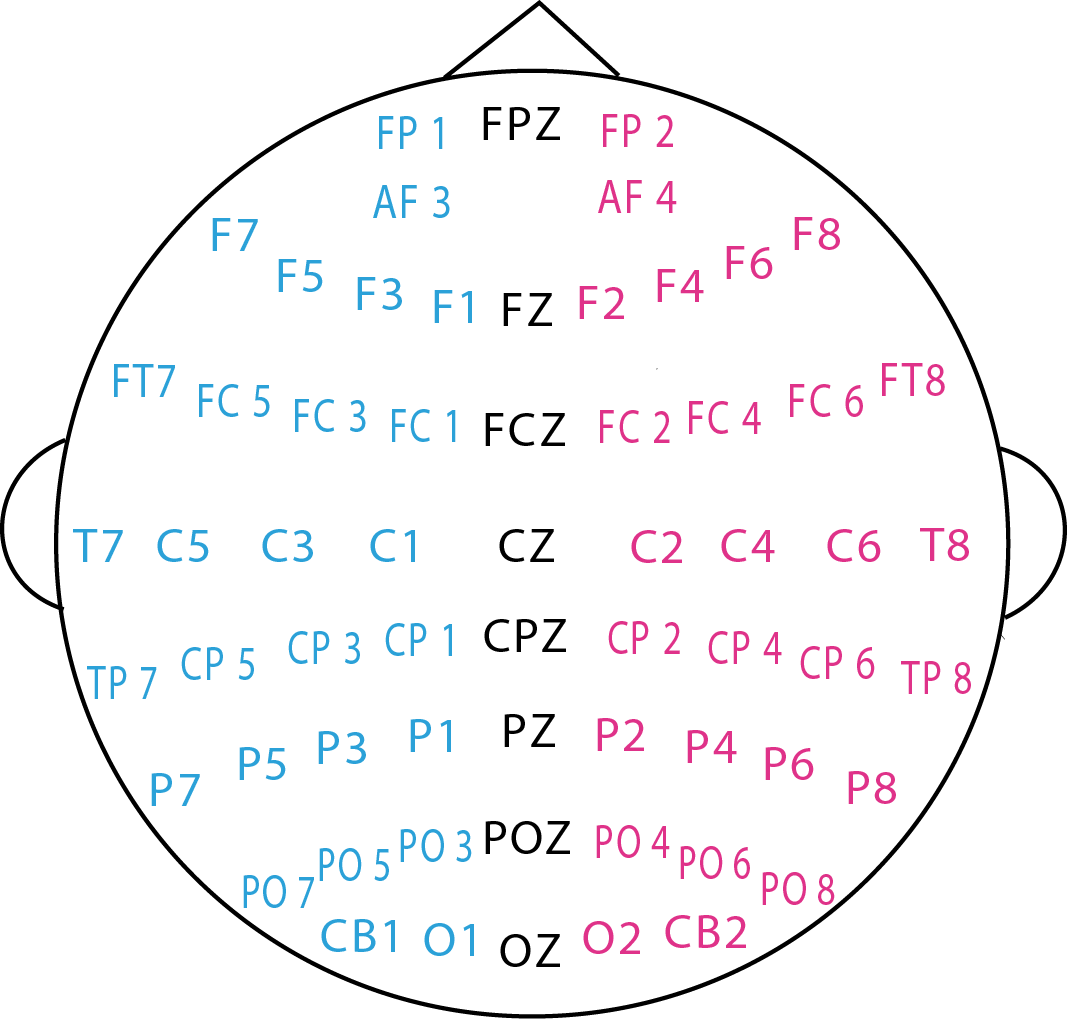

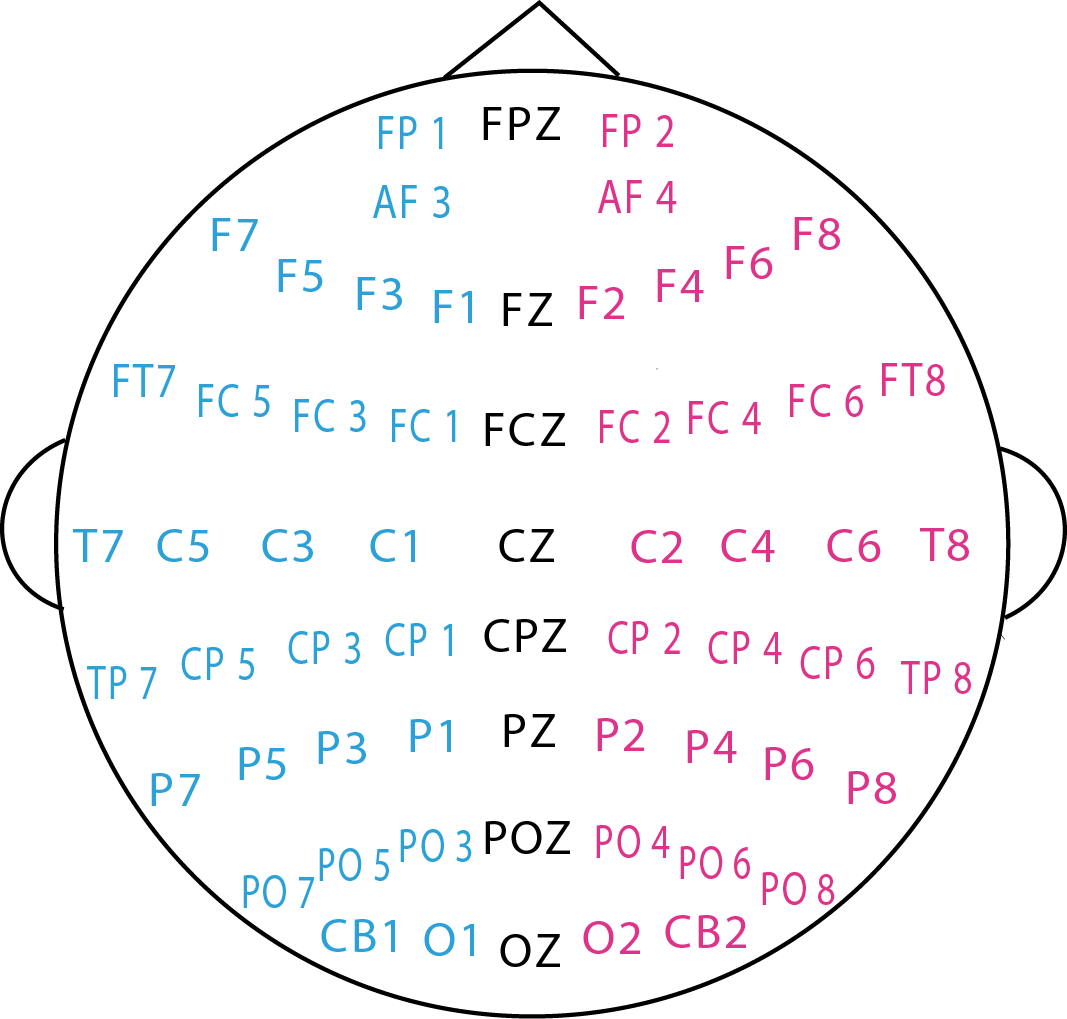

The 62-channel EEG cap layout, following the international 10-20 system, is shown below:

- In the "Extracted_Features" folder, there are files that contain the differential entropy (DE) features of the EEG signals, a feature first proposed in [2].

These data are well suited to those who want to quickly test a classification method without preprocessing the raw EEG data. The file format is the same as that of the files in the "Preprocessed_EEG" folder. We also computed differential asymmetry (DASM) and rational asymmetry (RASM) features as the differences and ratios, respectively, between the DE features of 27 pairs of hemispherically asymmetric electrodes. All of the features were further smoothed with conventional moving average and linear dynamic system (LDS) approaches. For more details about feature extraction and smoothing, please refer to [1] and [2].
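The following is a minimal sketch of these features, not the code used to produce the released files. It assumes the band-filtered signal in a segment is approximately Gaussian, in which case its differential entropy reduces to ½ ln(2πeσ²); band filtering, the 27 electrode pairs and LDS smoothing are omitted, and the moving-average window of 5 is an arbitrary choice for illustration.

```python
import math
import statistics
from typing import List, Sequence


def differential_entropy(signal: Sequence[float]) -> float:
    """DE of one band-filtered segment, assuming a Gaussian: 0.5 * ln(2*pi*e*var)."""
    var = statistics.pvariance(signal)
    return 0.5 * math.log(2 * math.pi * math.e * var)


def dasm(de_left: Sequence[float], de_right: Sequence[float]) -> List[float]:
    """Differential asymmetry: DE(left electrode) - DE(right electrode), per feature."""
    return [l - r for l, r in zip(de_left, de_right)]


def rasm(de_left: Sequence[float], de_right: Sequence[float]) -> List[float]:
    """Rational asymmetry: DE(left electrode) / DE(right electrode), per feature."""
    return [l / r for l, r in zip(de_left, de_right)]


def moving_average(values: Sequence[float], window: int = 5) -> List[float]:
    """Centered moving average over a feature sequence; edges use a shorter window."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(sum(values[lo:hi]) / (hi - lo))
    return out


if __name__ == "__main__":
    # Unit-variance toy signal: DE = 0.5 * ln(2*pi*e), about 1.4189 nats.
    print(round(differential_entropy([1.0, -1.0, 1.0, -1.0]), 4))
```

Because DE depends only on the variance under the Gaussian assumption, it is cheap to compute per channel and frequency band, which is part of why precomputed DE files are convenient for quick experiments.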

- SEED_Multimodal

- The ‘Chinese’ folder contains four subfolders:

- 01-EEG-raw: raw EEG signals in .cnt format, sampled at 1000 Hz.

- 02-EEG-DE-feature: DE features extracted with 1-second and 4-second sliding windows, and source code to read the data.

- 03-Eye-tracking-excel: Excel files of eye-tracking information.

- 04-Eye-tracking-feature: eye-tracking features in pickle format, and source code to read the data.

- The ‘code’ folder contains the source code of the models used in this paper:

- SVM, KNN, and logistic regression source code.

- DNN source code.

- Traditional fusion methods.

- Bimodal deep autoencoder.

- Deep canonical correlation analysis with attention mechanism.

- The source code is also available on GitHub.

- ‘information.xlsx’: contains information about the experiments and subjects.

- For movie clips, positive, negative, and neutral emotions are labeled as 1, -1, and 0, respectively.

- To build classifiers, we used 2, 0, and 1 for positive, negative, and neutral emotions, respectively (i.e., the movie clip label plus one). Labels are not saved with the eye-tracking features, since they are the same as the labels for the EEG DE features.
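The label convention can be expressed as a small mapping. In this sketch, `window_labels` is a hypothetical helper that expands one classifier label per trial into one label per feature window; it assumes the number of windows per trial is known, and that eye-tracking features can reuse the resulting EEG labels window by window.

```python
from typing import List, Sequence

# Movie-clip labels as documented: positive = 1, neutral = 0, negative = -1.
CLIP_LABELS = {"positive": 1, "neutral": 0, "negative": -1}


def to_classifier_label(clip_label: int) -> int:
    """Classifier label = movie clip label + 1 (negative 0, neutral 1, positive 2)."""
    if clip_label not in (-1, 0, 1):
        raise ValueError(f"unexpected clip label: {clip_label}")
    return clip_label + 1


def window_labels(trial_labels: Sequence[int],
                  windows_per_trial: Sequence[int]) -> List[int]:
    """Hypothetical helper: repeat each trial's classifier label once per window."""
    if len(trial_labels) != len(windows_per_trial):
        raise ValueError("need one window count per trial")
    labels: List[int] = []
    for clip_label, n_windows in zip(trial_labels, windows_per_trial):
        labels.extend([to_classifier_label(clip_label)] * n_windows)
    return labels
```

Shifting by one keeps the labels non-negative, which many classifier implementations expect for class indices.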

Download

Download SEED


References

If you find the dataset helpful for your research, please cite the following references in your publications.

1. Wei-Long Zheng and Bao-Liang Lu, Investigating Critical Frequency Bands and Channels for EEG-based Emotion Recognition with Deep Neural Networks, IEEE Transactions on Autonomous Mental Development (IEEE TAMD), 7(3): 162-175, 2015.


2. Ruo-Nan Duan, Jia-Yi Zhu and Bao-Liang Lu, Differential Entropy Feature for EEG-based Emotion Classification, Proc. of the 6th International IEEE EMBS Conference on Neural Engineering (NER), 2013: 81-84.
