SEED Dataset

A dataset collection for various purposes using EEG signals

Stimuli and Experiment


Each film clip lasts approximately 1 to 4 minutes. Each clip is carefully edited to elicit a coherent emotion and to maximize its emotional meaning.

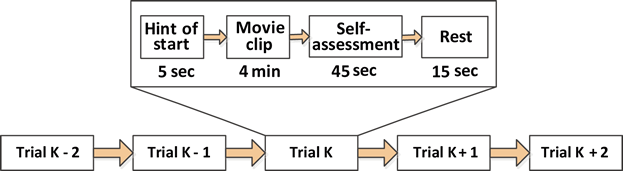

Each subject took part in the experiment three times, with 21 trials per session. In each session, there was a 5 s hint before each clip, followed by 45 s for self-assessment and 15 s of rest after each clip. The clips were ordered so that two film clips targeting the same emotion were never shown consecutively. For feedback, participants were asked to report their emotional reactions to each film clip by completing a questionnaire immediately after watching it. The detailed protocol is shown below:

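The trial timing described above can be turned into a rough session-length estimate. The following is a minimal sketch, not part of the dataset's own code: the function name `session_duration_s` is hypothetical, and the clip durations passed in are illustrative bounds (60 s and 240 s) derived from the stated range of roughly 1 to 4 minutes, not the actual clip lengths.

```python
# Fixed per-trial timings taken from the protocol description (in seconds).
HINT_S = 5
SELF_ASSESSMENT_S = 45
REST_S = 15
TRIALS_PER_SESSION = 21


def session_duration_s(clip_durations_s):
    """Return the total session length in seconds.

    Each trial is counted as hint + clip + self-assessment + rest.
    `clip_durations_s` is a hypothetical list of clip lengths in seconds.
    """
    if len(clip_durations_s) != TRIALS_PER_SESSION:
        raise ValueError(f"expected {TRIALS_PER_SESSION} clips per session")
    overhead = HINT_S + SELF_ASSESSMENT_S + REST_S
    return sum(duration + overhead for duration in clip_durations_s)


# Illustrative bounds, assuming every clip is 1 minute or every clip is 4 minutes.
shortest = session_duration_s([60] * TRIALS_PER_SESSION)
longest = session_duration_s([240] * TRIALS_PER_SESSION)
print(f"session length: {shortest / 60:.2f} to {longest / 60:.2f} minutes")
```

Under these assumptions, the fixed overhead alone is 65 s per trial, so a session would last somewhere between about 44 and 107 minutes depending on the actual clip lengths.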

Subjects

Eight French subjects (5 males and 3 females; age MEAN: 22.5, STD: 2.78) participated in the experiments. To protect personal privacy, we hide their names and identify each subject by a number from 1 to 8.

Dataset Summary

The contents of SEED-FRA are listed below:

- The ‘French’ folder contains four subfolders.

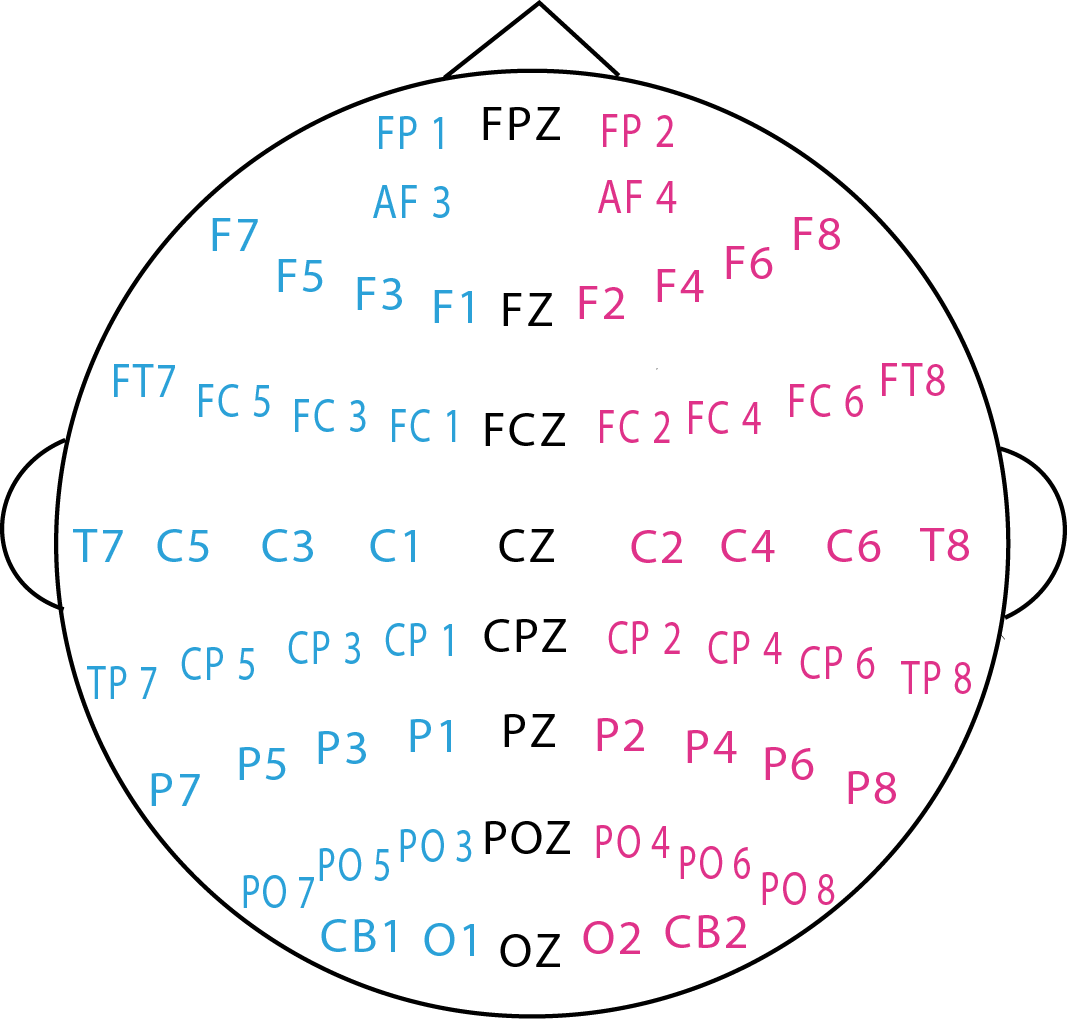

- 01-EEG-raw: contains raw EEG signals in .cnt format with a sampling rate of 1000 Hz.

- 02-EEG-DE-feature: contains differential entropy (DE) features extracted with 1-second and 4-second sliding windows, and source code to read the data.

- 03-Eye-tracking-excel: contains Excel files of eye-tracking information.

- 04-Eye-tracking-feature: contains eye-tracking features in pickled format and source code to read the data.

- The ‘code’ folder contains source code for the models used in this paper.

- SVM, KNN, and logistic regression source code.

- DNN source code.

- Traditional fusion methods.

- Bimodal deep autoencoder.

- Deep canonical correlation analysis with attention mechanism.

- The source code is also available on GitHub.

- ‘information.xlsx’: contains information about the experiments and subjects.

- For movie clips, positive, negative, and neutral emotions are labeled as 1, -1, and 0 respectively.

- To build classifiers, we used 2, 0, and 1 for positive, negative, and neutral emotions, respectively (i.e., movie clip label plus one). In addition, we did not save labels with the eye-tracking features, since they are the same as the labels for the EEG DE features.
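The label convention above (movie clip label plus one) can be sketched as follows. This is an illustrative helper, not the dataset's own code: the names `clip_label_to_class` and `CLASS_NAMES` are hypothetical, and the example label list is made up for demonstration.

```python
# Classifier class index -> emotion name, following the convention stated above.
CLASS_NAMES = {0: "negative", 1: "neutral", 2: "positive"}


def clip_label_to_class(clip_label):
    """Map a movie clip label (-1, 0, 1) to a classifier label (0, 1, 2)."""
    if clip_label not in (-1, 0, 1):
        raise ValueError(f"unexpected clip label: {clip_label!r}")
    return clip_label + 1


# Made-up clip labels, used only to demonstrate the mapping.
example_clip_labels = [1, -1, 0]
class_labels = [clip_label_to_class(label) for label in example_clip_labels]
print(class_labels)                            # [2, 0, 1]
print([CLASS_NAMES[c] for c in class_labels])  # ['positive', 'negative', 'neutral']
```

Because the eye-tracking features are stored without labels, the same mapped labels would be reused for them, assuming the eye-tracking samples are aligned with the EEG DE feature samples.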

Download

References

If you find the dataset helpful for your study, please cite reference [1] in your publications.

1. Wei Liu, Wei-Long Zheng, Ziyi Li, Si-Yuan Wu, Lu Gan and Bao-Liang Lu, Identifying similarities and differences in emotion recognition with EEG and eye movements among Chinese, German, and French People, Journal of Neural Engineering 19.2 (2022): 026012.

2. Schaefer A, Nils F, Sanchez X and Philippot P, Assessing the effectiveness of a large database of emotion-eliciting films: a new tool for emotion researchers, Cognition and Emotion 24.7 (2010): 1153-1172.