Experiment Setup

Emotions were induced with video clips. Twelve clips were selected for each of the six
emotions other than neutral, and eight clips were selected for the neutral state, giving a
total of 80 video clips. Each video clip lasted two to five minutes, and the total duration
of all the clips was approximately 14,097.86 seconds.
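As a quick consistency check on these numbers, the short sketch below recomputes the clip count and the average clip length. It assumes six non-neutral emotions, which is what the total of 80 clips implies; the variable names are illustrative only.

```python
# Consistency check on the stimulus counts (variable names are illustrative).
N_NON_NEUTRAL_EMOTIONS = 6     # assumption: implied by 6 * 12 + 8 = 80
CLIPS_PER_EMOTION = 12
NEUTRAL_CLIPS = 8
TOTAL_DURATION_S = 14097.86    # approximate total duration reported above

total_clips = N_NON_NEUTRAL_EMOTIONS * CLIPS_PER_EMOTION + NEUTRAL_CLIPS
mean_clip_s = TOTAL_DURATION_S / total_clips

print(total_clips)                 # 80
print(round(mean_clip_s, 2))       # 176.22
print(round(mean_clip_s / 60, 2))  # 2.94
```

The average of roughly 2.9 minutes per clip falls inside the stated two-to-five-minute range.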


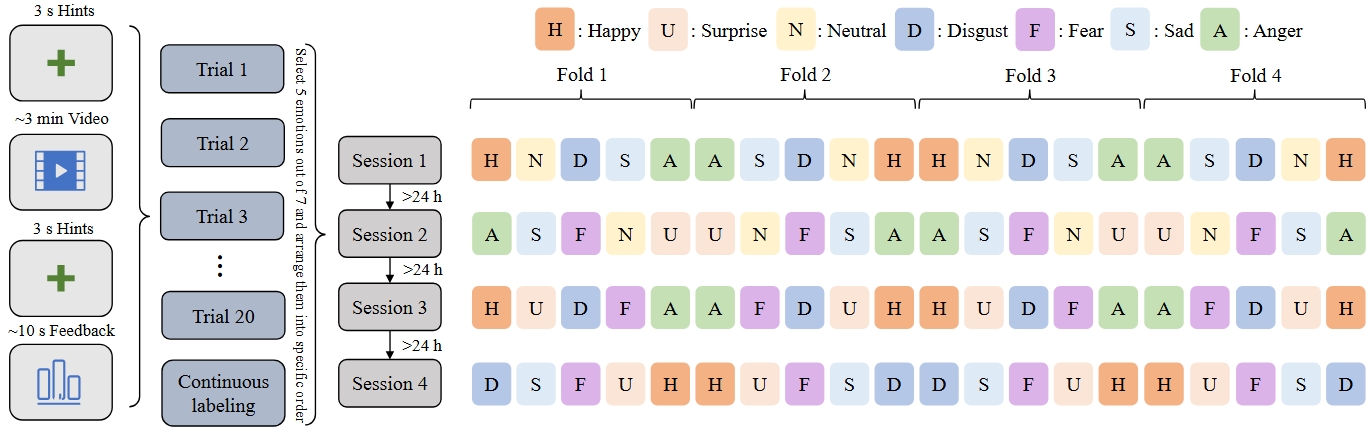

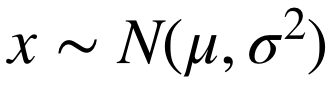

All subjects completed four experimental sessions. The procedure of the experiment is
illustrated in the accompanying figure. Each session included twenty trials, and each trial
consisted of two parts: watching a video, followed by self-assessment. In each session, only
five of the seven emotions were elicited, which limited the number of emotional states a
subject had to switch between. A 3-second countdown was shown before and after each video
clip to alert participants to the imminent start or end of the video. The sequence of video
clips shown in the figure was carefully arranged to avoid sudden shifts in emotional valence,
as human emotions tend to change gradually.

The 80 video clips from the four sessions were divided into four folds. Each fold contained
five clips from each session, and the emotional videos were balanced in number. At the end of
each session, the participants were instructed to review all twenty video clips, recall the
emotional responses they experienced during the session, and assign continuous labels to the
entire session using a mouse wheel. The subjects were free to review the clips either in real
time or at an accelerated playback speed.
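The fold structure can be sketched as follows. The text does not say which clips go into which fold, so this sketch assumes that consecutive blocks of five trials within a session share a fold; the function name `assign_folds` and the 1-based indexing are hypothetical, and the dataset's actual assignment may differ.

```python
from collections import Counter

N_SESSIONS = 4
TRIALS_PER_SESSION = 20
N_FOLDS = 4
CLIPS_PER_FOLD_PER_SESSION = TRIALS_PER_SESSION // N_FOLDS  # 5


def assign_folds():
    """Map (session, trial) -> fold, all indices 1-based.

    Assumption: consecutive blocks of five trials in a session share a fold.
    """
    folds = {}
    for session in range(1, N_SESSIONS + 1):
        for trial in range(1, TRIALS_PER_SESSION + 1):
            folds[(session, trial)] = (trial - 1) // CLIPS_PER_FOLD_PER_SESSION + 1
    return folds


folds = assign_folds()
fold_sizes = Counter(folds.values())
print(len(folds))          # 80
print(dict(fold_sizes))    # {1: 20, 2: 20, 3: 20, 4: 20}
```

Whatever the real assignment is, any valid split must satisfy the same two counts: 20 clips per fold, five of them from each session.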


In the self-assessment part, the subjects were asked to score how effectively the stimulus
material induced the target emotion. Scores ranged from 0 to 1, where 1 represented the best
induction effect and 0 the worst. For example, a participant who felt joy after watching a joy
video should give a score near 1, and a participant who felt nothing should give a score of 0.
Note that for neutral stimulus material, a score of 1 means the subject remained in a natural
state, whereas a subject whose mood fluctuated should give a score of 0.

Subjects

Twenty subjects (10 males and 10 females) aged 19 to 26 years (mean: 22.5; STD: 1.80)
completed the experiments with usable data recorded. All participants were from Shanghai Jiao
Tong University, were right-handed, and had self-reported normal or corrected-to-normal vision
and normal hearing. The participants were selected using the Eysenck Personality Questionnaire
(EPQ), a widely used questionnaire developed by Eysenck to assess an individual's personality
traits.

Feature Extraction

EEG Features

To mitigate the impact of noise, we first visually inspect the EEG signals and interpolate
any bad channels using the MNE-Python toolbox. We then apply a bandpass filter with cutoff
frequencies of 0.1 Hz and 70 Hz to remove low-frequency drift and high-frequency noise.
Additionally, a notch filter centered at 50 Hz is applied to suppress powerline interference.
To reduce the computational cost of our method, we downsample the EEG signals from the
original sampling rate of 1000 Hz to 200 Hz.
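The filtering and downsampling steps can be sketched as follows. This is a minimal illustration that uses SciPy as a stand-in, not the MNE-Python pipeline used for the dataset. The function name `preprocess_eeg`, the filter order, the notch quality factor, and the synthetic test signal are all assumptions, and bad-channel interpolation is omitted.

```python
import numpy as np
from scipy import signal


def preprocess_eeg(data, sfreq=1000.0, band=(0.1, 70.0), notch_hz=50.0,
                   target_sfreq=200.0):
    """Band-pass, notch-filter, and downsample EEG of shape (n_channels, n_samples)."""
    # 4th-order Butterworth band-pass (the order is an assumption), applied
    # forward and backward so that the filter adds no phase shift.
    sos = signal.butter(4, band, btype="bandpass", fs=sfreq, output="sos")
    filtered = signal.sosfiltfilt(sos, data, axis=-1)

    # Narrow notch at the powerline frequency (Q = 30 is an assumption).
    b, a = signal.iirnotch(notch_hz, Q=30.0, fs=sfreq)
    filtered = signal.filtfilt(b, a, filtered, axis=-1)

    # Integer-factor downsampling with a built-in anti-aliasing filter.
    factor = int(round(sfreq / target_sfreq))
    return signal.resample_poly(filtered, up=1, down=factor, axis=-1)


# Synthetic two-channel example: a 10 Hz rhythm plus 50 Hz interference.
t = np.arange(10_000) / 1000.0
raw = np.vstack([np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 50 * t)] * 2)
clean = preprocess_eeg(raw)
print(clean.shape)  # (2, 2000)
```

Ten seconds at 1000 Hz become 2000 samples at 200 Hz, and the 50 Hz component is strongly attenuated while the 10 Hz rhythm passes through.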

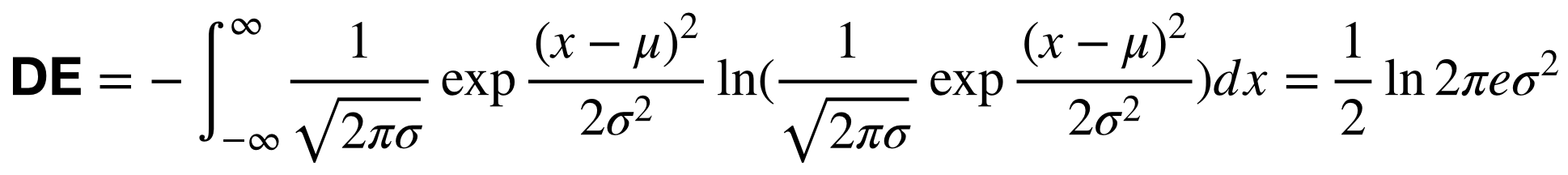

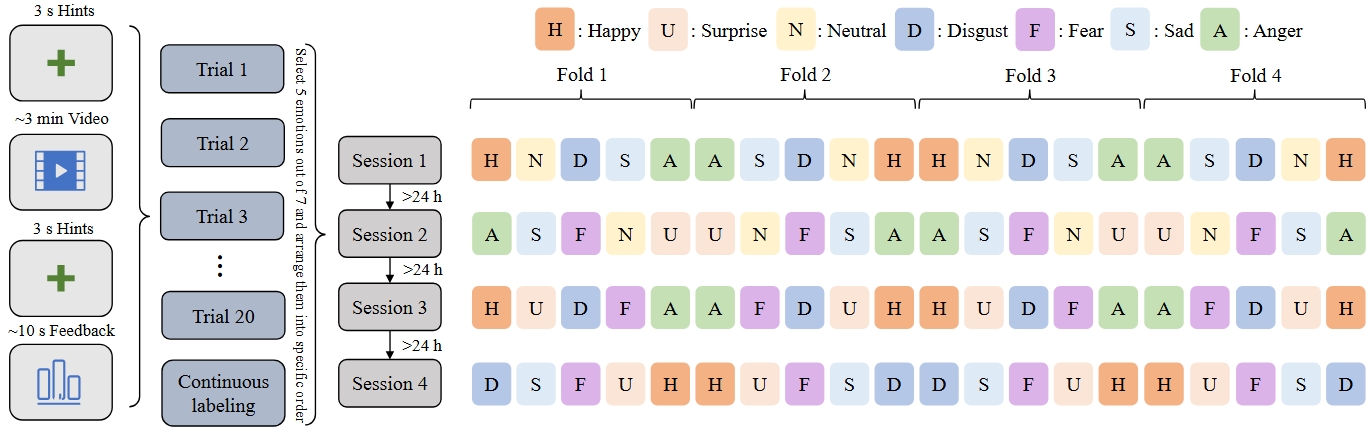

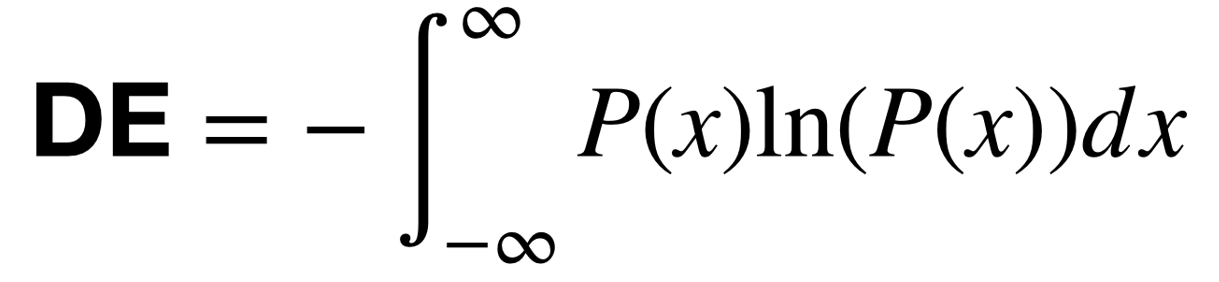

Afterward, we extract differential entropy (DE) features within each segment in five
frequency bands: 1) delta: 1-4 Hz; 2) theta: 4-8 Hz; 3) alpha: 8-14 Hz; 4) beta: 14-31 Hz;
and 5) gamma: 31-50 Hz.

We assume that the EEG signals obey a Gaussian distribution: $x \sim \mathcal{N}(\mu, \sigma^2)$.

Then, the calculation of the DE features can be simplified:

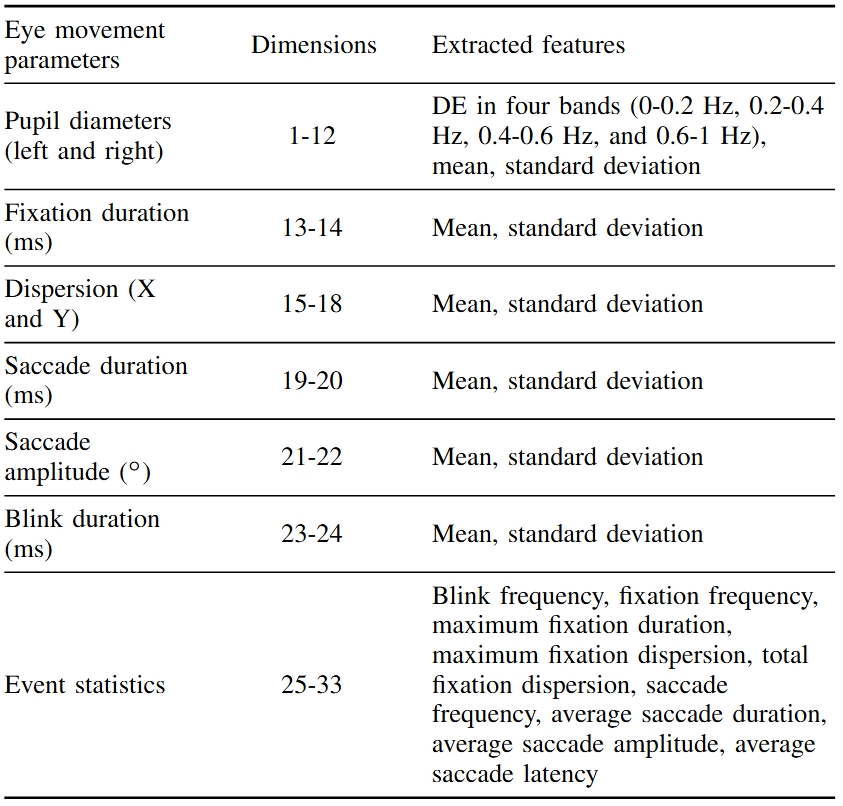
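For a Gaussian variable with variance σ², the differential entropy has the closed form ½ log(2πeσ²), so DE reduces to a function of the band-limited signal variance. The sketch below illustrates this for the five bands. It is not the dataset's extraction code: estimating the band variance from FFT bins, the 1-second segment length, and the names `band_variance` and `differential_entropy` are assumptions made for the example.

```python
import numpy as np

# Frequency bands (Hz) used for the DE features.
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 14),
         "beta": (14, 31), "gamma": (31, 50)}


def band_variance(segment, sfreq, low, high):
    """Variance of `segment` carried by frequencies in [low, high) Hz."""
    n = segment.shape[-1]
    spectrum = np.fft.rfft(segment, axis=-1)
    freqs = np.fft.rfftfreq(n, d=1.0 / sfreq)
    mask = (freqs >= low) & (freqs < high)
    # Parseval: every positive-frequency bin has a mirror image, hence the
    # factor 2 (valid here because no band contains DC or the Nyquist bin).
    return 2.0 * np.sum(np.abs(spectrum[..., mask]) ** 2, axis=-1) / n**2


def differential_entropy(segment, sfreq=200.0, eps=1e-12):
    """DE per channel and band for a segment of shape (n_channels, n_samples)."""
    de = [0.5 * np.log(2 * np.pi * np.e * (band_variance(segment, sfreq, lo, hi) + eps))
          for lo, hi in BANDS.values()]
    return np.stack(de, axis=-1)  # shape: (n_channels, 5)


# One channel, 1 s at 200 Hz: a unit-amplitude 10 Hz sine plus weak noise.
rng = np.random.default_rng(0)
t = np.arange(200) / 200.0
segment = np.sin(2 * np.pi * 10 * t)[None, :] + 0.01 * rng.standard_normal((1, 200))
de = differential_entropy(segment)
print(de.shape)  # (1, 5)
```

Because the 10 Hz sine lies in the alpha band, the alpha DE is the largest of the five values and is close to ½ log(πe), the value for a variance of 0.5.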

Eye Movement Features

For the eye movement data collected with the Tobii Pro Fusion eye tracker, we extracted
features from parameters commonly used in the literature, such as pupil diameter, fixation,
saccade, and blink. A detailed list of eye movement features is shown below.

Dataset Summary

- Folder EEG_features: This folder contains the DE features of the 20 participants. Feature files are named "subjectID.mat"; for example, "1.mat" holds the DE features of the first subject. The keys in each .mat file are named "de_LDS_videoID" or "de_videoID". For example, the key "de_LDS_1" holds the DE features of the first video trial smoothed by the LDS algorithm, and the key "de_80" holds the unsmoothed DE features of the 80th video trial.

- Folder EEG_raw: This folder contains the raw EEG data (.cnt files) collected with the Neuroscan device. Raw files are named in the "subjectID_date_sessionID.cnt" format. For example, "1_20221001_1.cnt" is the raw data of the first subject in the first session. **Notice: session numbers are assigned according to the stimulus materials watched, not according to time.**

- Folder EYE_features: This folder contains the extracted eye movement features.

- Folder EYE_raw: This folder contains the tsv files exported from the eye tracking device.

- Folder src: This folder contains two files. load_cnt_file_with_mne.py is example code for loading a cnt file, preprocessing the EEG signals, and clipping them according to the triggers. channel_62_pos.locs is the montage file giving the scalp positions of the 62 EEG channels.

- Folder save_info: This folder contains two kinds of files. Files of the first kind are named in the "subjectID_date_sessionID_trigger_info.csv" format and contain the start and end times of the stimulus movie clips. Specifically, trigger 1 indicates the start time of a trial and trigger 2 indicates the end time of a trial. Files of the second kind are named in the "subjectID_date_sessionID_save_info.csv" format and contain the subject's feedback for each movie clip. The score ranges from 0 to 1 and indicates how successfully the targeted emotion was elicited by each video.

- File emotion_label_and_stimuli_order.xlsx: This file contains the emotion labels and the stimulus order.

- File subject info.xlsx: This file contains meta-information about the subjects.

- File Channel Order.xlsx: This file contains the channel order for the DE features.
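The naming conventions above can be handled with a few small helpers. The sketch below is illustrative only: `parse_raw_name`, `de_key`, and `collect_de` are hypothetical names, the 8-digit date pattern is inferred from the single example "1_20221001_1.cnt", and the shape of the stored arrays is not assumed.

```python
import re

# Pattern for "subjectID_date_sessionID.cnt"; the 8-digit date is an assumption
# based on the example file name "1_20221001_1.cnt".
RAW_NAME = re.compile(r"^(?P<subject>\d+)_(?P<date>\d{8})_(?P<session>\d+)\.cnt$")


def parse_raw_name(filename):
    """Split a raw EEG file name into subject, date, and session."""
    match = RAW_NAME.match(filename)
    if match is None:
        raise ValueError(f"unexpected raw file name: {filename!r}")
    return {"subject": int(match["subject"]),
            "date": match["date"],
            "session": int(match["session"])}


def de_key(video_id, smoothed=True):
    """Key of one video trial inside a subject's DE feature file."""
    return f"de_LDS_{video_id}" if smoothed else f"de_{video_id}"


def collect_de(mat, smoothed=True, n_videos=80):
    """Gather the per-video DE arrays that are present in a dict-like `mat`."""
    return {v: mat[de_key(v, smoothed)]
            for v in range(1, n_videos + 1)
            if de_key(v, smoothed) in mat}


print(parse_raw_name("1_20221001_1.cnt"))
print(de_key(1), de_key(80, smoothed=False))  # de_LDS_1 de_80
```

With SciPy installed, a feature file could be read with `scipy.io.loadmat("EEG_features/1.mat")` and the result passed to `collect_de`, provided the file is in a MATLAB format that `loadmat` supports.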

Download

Download SEED-VII


Reference

If you find the dataset helpful for your study, please cite the following reference in your publications.

Wei-Bang Jiang, Xuan-Hao Liu, Wei-Long Zheng and Bao-Liang Lu, "SEED-VII: A Multimodal Dataset of Six Basic Emotions with Continuous Labels for Emotion Recognition," IEEE Transactions on Affective Computing, vol. 16, no. 2, pp. 969-985, April-June 2025, doi: 10.1109/TAFFC.2024.3485057.

